Deploying a GPT-4o app to production: What we've learned

July 1, 2024

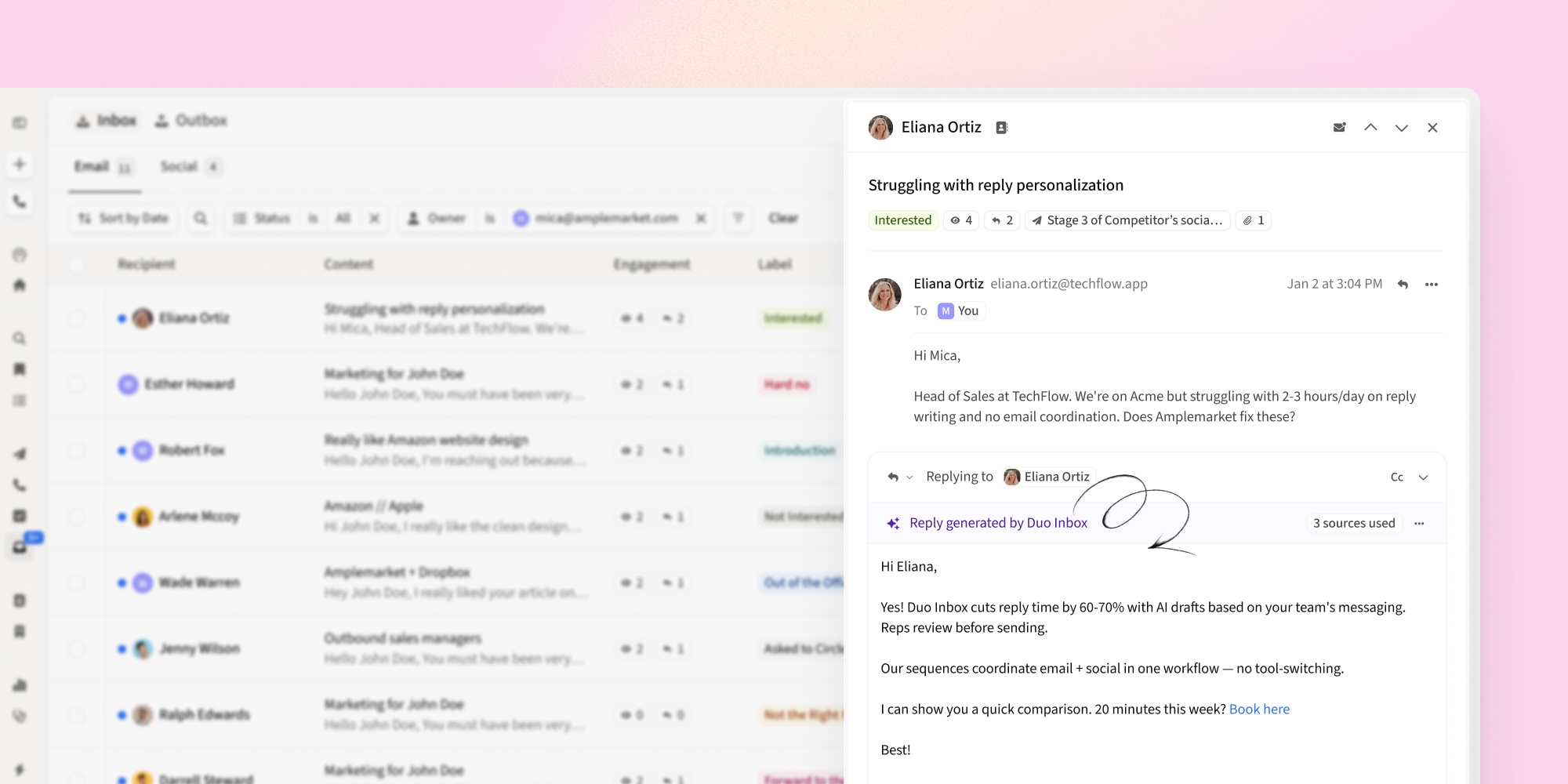

At Amplemarket, we envisioned a generative AI sales copy tool that helps our users write the perfect introductory message to leads in record time.

In the process, we've learned a lot about using GPT-4, and in this post we aim to give you a head start on your own AI-driven adventures!

This is not an introduction to GPT-4 or the GPT family of models; for that you can, for example, read this blog post.

Writing a good prompt for a GPT-4o app

GPT-4 was designed to interpret natural language and is already very powerful and versatile, so we suggest focusing first on crafting a high-quality prompt, since that is usually the fastest way to get good results. Other optimizations, such as adjusting hyperparameters (like temperature) or fine-tuning the model for your specific task, are only necessary in certain situations.

GPT-4 outperforms previous GPT models in many ways, but it won't always produce the results you want. This might be because it does not clearly understand the assigned task or the context provided. It is also susceptible to hallucinations, in which it generates plausible-sounding information that is not supported by the context provided.

To reduce these inaccuracies, we recommend writing a prompt that is as concise, clear, and specific as possible, while avoiding excessive constraints on the model.

In the words of GPT-4 itself:

"Crafting GPT prompts is akin to a dialogue with a nascent mind: gently guide its focus, yet leave room for serendipity."

Providing extra information in the prompt can help reduce hallucinations. For example, adding the current date to the prompt helps GPT-4 avoid time-related hallucinations, since the model has no way of knowing today's date on its own. To minimize misunderstandings in a long prompt, divide it into clearly labeled sections, each focused on a specific aspect of the task.
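A minimal sketch of such a prompt, assuming Python and a layout of our own choosing: the `### NAME` section markers, the section names, and the `build_prompt` helper are hypothetical illustrations, not a format GPT-4 requires.

```python
from datetime import date
from typing import List, Optional


def build_prompt(task: str, context: str, constraints: List[str],
                 today: Optional[date] = None) -> str:
    """Assemble a prompt from clearly labeled sections, starting with today's date."""
    today = today or date.today()  # the model cannot know the date unless we tell it
    constraint_lines = "\n".join(f"- {c}" for c in constraints)
    sections = [
        ("DATE", f"Today is {today.isoformat()}."),
        ("TASK", task),
        ("CONTEXT", context),
        ("CONSTRAINTS", constraint_lines),
    ]
    # One labeled block per aspect of the task, separated by blank lines.
    return "\n\n".join(f"### {name}\n{body}" for name, body in sections)
```

Keeping each aspect in its own labeled block also makes it easy to swap out a single section when comparing prompt variants.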

ChatGPT itself is also a powerful tool for generating good prompts, so do use it! It not only helps non-native English speakers write more natural prompts in English but also makes it easy to list multiple rephrasings of a prompt, which can then be tested individually. Often we start with only a vague sense of what we want to convey and use GPT to iterate on it until it becomes a well-crafted prompt.
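The same trick works through the API. Here is a sketch under assumptions: the meta-prompt wording and the helper names `rephrase_request` and `parse_numbered_list` are hypothetical, and the parser assumes the model answers with a numbered list (it silently skips any other lines).

```python
import re
from typing import Dict, List


def rephrase_request(prompt: str, n: int = 5) -> List[Dict[str, str]]:
    """Chat messages asking the model for n alternative phrasings of a prompt."""
    return [
        {"role": "system", "content": "You are an expert prompt engineer."},
        {"role": "user", "content": (
            f"Rewrite the following prompt in {n} different ways. Keep the intent, "
            "improve clarity, and answer as a numbered list with one rewrite per line.\n\n"
            f"PROMPT:\n{prompt}"
        )},
    ]


def parse_numbered_list(text: str) -> List[str]:
    """Extract items from lines like '1. ...' or '2) ...', ignoring everything else."""
    items = []
    for line in text.splitlines():
        match = re.match(r"^\s*\d+[.)]\s+(.*\S)", line)
        if match:
            items.append(match.group(1))
    return items
```

With the OpenAI Python SDK (v1+), the messages would go to `client.chat.completions.create(model=..., messages=...)`, and the reply text in `response.choices[0].message.content` would be passed to `parse_numbered_list`, giving one candidate prompt per list item.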

If you are interested, here's a good guide for writing prompts with much more information.

Scaling up prompt testing for your GPT-4o app

There's an AI community out there ready and willing to collaborate on prompt testing. You can even crowd-source prompt experiments by building a simple app (for example, with Streamlit) that lets others test their own prompt ideas.

However, when transitioning to production, it's important to exercise more caution and test more thoroughly. Even after generating many completions that look fine, GPT may still occasionally produce undesirable text. It can also be quite difficult to tell whether a revised prompt is truly an improvement over its previous version.

To address this, we advise setting up a reliable testing framework that helps you choose between prompt versions. A simple way to do this is a script that generates multiple completions for various test cases with each prompt version and writes the output to a Google Sheet or a similar tool.
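Such a script can be sketched as follows. This is an illustration under assumptions: `run_prompt_experiment` is a hypothetical helper, prompt versions are Python format strings with placeholders such as `{name}`, and `generate` is any function you supply that calls the model (for example, a thin wrapper around the OpenAI client). The output is CSV text, which Google Sheets can import directly.

```python
import csv
import io
from typing import Callable, Dict, List


def run_prompt_experiment(
    prompt_versions: Dict[str, str],
    test_cases: List[Dict[str, str]],
    generate: Callable[[str], str],
    samples_per_case: int = 3,
) -> str:
    """Generate completions for every (test case, prompt version) pair and return CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["test_case", "prompt_version", "sample", "completion"])
    for case_index, case in enumerate(test_cases):
        for version, template in prompt_versions.items():
            prompt = template.format(**case)  # fill placeholders such as {name}
            # Several samples per pair, because a single completion can be unrepresentative.
            for sample in range(samples_per_case):
                writer.writerow([case_index, version, sample, generate(prompt)])
    return buffer.getvalue()
```

Putting all versions of the same test case side by side in one sheet makes it easier for reviewers to compare them row by row.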

While this framework won't catch every issue, it will at least surface the obvious ones. It can also help to get feedback from team members with more context, or to have several people vote on which prompt version they prefer.
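Collecting those votes can stay very simple. A minimal sketch, assuming each vote is recorded as a hypothetical `(reviewer, test_case, preferred_version)` tuple, for example one row per reviewer in the shared sheet:

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def tally_votes(votes: Iterable[Tuple[str, int, str]]) -> Tuple[Counter, Dict[int, str]]:
    """Count votes per prompt version overall and pick a winner per test case.

    Ties within a test case go to the version that was voted for first,
    because Counter.most_common keeps first-encountered order for equal counts.
    """
    overall: Counter = Counter()
    per_case: Dict[int, Counter] = defaultdict(Counter)
    for _reviewer, case, version in votes:
        overall[version] += 1
        per_case[case][version] += 1
    winners = {case: counts.most_common(1)[0][0] for case, counts in per_case.items()}
    return overall, winners
```

Looking at per-case winners, not just the overall count, shows whether a new version is better across the board or only on a few test cases.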

You might already be contemplating this, but when using generative models it is crucial to gather early feedback from real users. They are likely to encounter unique issues that you may have overlooked despite extensive testing. Having an effective feedback system in place and open communication channels within your team enables you to refine your prompts as such issues arise in production.

Moreover, a company-wide app like the one suggested earlier, which lets people reproduce reported issues and modify the prompts as needed, will greatly speed up problem-solving.
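Reproducing a reported issue is much easier when every generation is logged with everything needed to replay it. The record below is a sketch under assumptions: the `GenerationRecord` fields, the thumbs-up/down rating, and the `replay_prompt` helper are hypothetical, and prompt versions are again assumed to be Python format strings.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, Optional


@dataclass
class GenerationRecord:
    """Everything needed to reproduce a completion that a user reported."""
    prompt_version: str
    inputs: Dict[str, str]
    model: str
    temperature: float
    completion: str
    user_rating: Optional[int] = None  # e.g. +1 / -1 from a thumbs up/down widget
    user_comment: str = ""
    created_at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, raw: str) -> "GenerationRecord":
        return cls(**json.loads(raw))


def replay_prompt(record: GenerationRecord, templates: Dict[str, str]) -> str:
    """Rebuild the exact prompt the user saw, so it can be edited and re-run."""
    return templates[record.prompt_version].format(**record.inputs)
```

Storing the model name and temperature alongside the inputs matters because the same prompt can behave differently under other sampling settings.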

Why users prefer transparent GPT-4o apps

At times, early users may want more options to adjust the model's output. Addressing the most requested improvements will often help create a better product for general use, but you need to weigh their expectations against your vision for the product.

Moreover, if you are integrating data from multiple sources to generate text, users may struggle to comprehend the AI's reasoning behind a specific output and might assume it is simply fabricating the text. To address this concern, consider displaying the sources of information within the user interface, as exemplified by Bing Chat.
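One way to surface sources is to number them in the prompt, ask the model to cite them as `[1]`, `[2]`, and so on, and then map those markers back to the original sources for display. A minimal sketch, assuming that citation convention (the helper names are hypothetical); since the model will not always comply, out-of-range numbers are ignored.

```python
import re
from typing import List, Tuple


def number_sources(sources: List[str]) -> str:
    """Render sources as a numbered block the model can cite as [1], [2], ..."""
    return "\n".join(f"[{i}] {text}" for i, text in enumerate(sources, start=1))


def cited_sources(output: str, sources: List[str]) -> List[Tuple[int, str]]:
    """Return the (number, source) pairs the model actually cited, in order of first use."""
    seen: List[int] = []
    for match in re.finditer(r"\[(\d+)\]", output):
        n = int(match.group(1))
        if 1 <= n <= len(sources) and n not in seen:  # skip invented or repeated citations
            seen.append(n)
    return [(n, sources[n - 1]) for n in seen]
```

The UI can then list only the sources that were actually used, next to the generated text.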

Managing OpenAI credits for your GPT app

Costs can quickly escalate if, as we've suggested, you run an internal prompt-testing framework that generates multiple completions, share an internal app among many people, or are already acquiring users.

Hence, it is important to monitor credit consumption and adjust the monthly limit as your app grows. This matters not only for financial reasons but also to avoid downtime when requests start failing because you have hit your OpenAI quota.
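Each API response reports its token usage (`usage.prompt_tokens` and `usage.completion_tokens` in the OpenAI Python SDK), so spend can be estimated as requests happen. A sketch under assumptions: `CreditMonitor` is a hypothetical helper, the per-1,000-token prices must be taken from OpenAI's current pricing page, and the 80% alert threshold is an arbitrary choice.

```python
from dataclasses import dataclass


@dataclass
class CreditMonitor:
    """Tracks estimated spend against a monthly limit.

    Prices are per 1,000 tokens and must be filled in from OpenAI's pricing;
    any numbers used in examples are placeholders, not real prices.
    """
    monthly_limit_usd: float
    prompt_price_per_1k: float
    completion_price_per_1k: float
    alert_fraction: float = 0.8  # warn when 80% of the limit is used
    spent_usd: float = 0.0

    def record(self, prompt_tokens: int, completion_tokens: int) -> float:
        """Add one request's estimated cost (from the response's usage) and return it."""
        cost = (prompt_tokens * self.prompt_price_per_1k
                + completion_tokens * self.completion_price_per_1k) / 1000
        self.spent_usd += cost
        return cost

    def should_alert(self) -> bool:
        """True once spend crosses the alert threshold, i.e. time to raise the limit."""
        return self.spent_usd >= self.alert_fraction * self.monthly_limit_usd
```

Alerting well before the hard limit leaves time for the manual limit increase described below.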

Be aware that increasing this limit is a manual process, and it takes some time for OpenAI to process the request and apply the change. Therefore, a spare API key from another account may come in handy in case you need to swap it out.

GPT-4's better reasoning capabilities, creativity, and understanding of a larger context may be crucial for your use case, but for more straightforward tasks a simpler and more cost-efficient model could prove equally effective.

For example, right now you can use OpenAI’s gpt-3.5-turbo, the model powering ChatGPT. It is still very powerful, but currently around 15 times cheaper than GPT-4!
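A simple way to act on this is to route each task type to a model explicitly. The sketch below is hypothetical: the task names are illustrative, and which tasks count as "straightforward" is something you would decide by testing both models on your own examples.

```python
from typing import Dict

# Hypothetical task categories mapped to models; decide these by testing.
MODEL_FOR_TASK: Dict[str, str] = {
    "classify_reply": "gpt-3.5-turbo",
    "extract_fields": "gpt-3.5-turbo",
    "write_intro_email": "gpt-4",
}


def choose_model(task: str, default: str = "gpt-4") -> str:
    """Use the cheaper model for straightforward tasks, falling back to the stronger one."""
    return MODEL_FOR_TASK.get(task, default)
```

Falling back to the stronger model for unknown tasks trades some cost for safety; the opposite default is just as valid if cost matters more.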

Addressing speed issues in GPT-4o apps

Text generation happens incrementally, token by token, and can take several seconds for long content. What your users care about most is the delay between starting generation (for example, by pressing a button) and the moment text appears on screen so they can start reading.

With this in mind, consider implementing the OpenAI API's streaming feature from the outset, so that words appear on the screen as they are generated, as on the ChatGPT website. Retrofitting this feature later may be hard!
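A minimal sketch of the consuming side, assuming the OpenAI Python SDK (v1+), where `client.chat.completions.create(..., stream=True)` yields chunks whose new text is in `chunk.choices[0].delta.content`. The `stream_to_screen` helper and its `write` callback (standing in for whatever pushes text to your UI) are hypothetical.

```python
from typing import Callable, Iterable


def stream_to_screen(chunks: Iterable, write: Callable[[str], None]) -> str:
    """Forward streamed text deltas to the UI as they arrive and return the full text.

    `chunks` is assumed to be the iterator returned by
    client.chat.completions.create(..., stream=True) in the OpenAI Python SDK.
    """
    parts = []
    for chunk in chunks:
        if not chunk.choices:  # some chunks (e.g. a final usage chunk) carry no choices
            continue
        delta = chunk.choices[0].delta.content
        if delta:  # deltas can be empty or None, e.g. the first and last chunks
            write(delta)
            parts.append(delta)
    return "".join(parts)
```

For a terminal demo, one could call `stream_to_screen(client.chat.completions.create(model="gpt-4", messages=messages, stream=True), lambda s: print(s, end="", flush=True))`. Returning the full text as well keeps it available for logging or caching once streaming ends.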

Even without the streaming feature, you can still explore ways to improve your product's user experience. For some use cases, you might consider retrieving multiple OpenAI completions in a single request and caching the extra responses, which can be shown in the user interface if the first one falls short of the user's expectations.
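A sketch of that caching idea, under assumptions: `AlternativeCache` is a hypothetical helper, and `fetch` is any function that returns several completions for one prompt. With the OpenAI Python SDK it could wrap `client.chat.completions.create(model=..., messages=..., n=n)` and return `[c.message.content for c in response.choices]`.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class AlternativeCache:
    """Fetch several completions per request and serve the extras on demand."""

    def __init__(self, fetch: Callable[[str, int], List[str]], n: int = 3):
        self._fetch = fetch  # returns n completions for a prompt
        self._n = n
        self._pending: Dict[str, Deque[str]] = {}

    def next_completion(self, prompt: str) -> str:
        """Return a cached alternative if one is left, else make one request for n more."""
        queue = self._pending.get(prompt)
        if not queue:
            queue = deque(self._fetch(prompt, self._n))
            self._pending[prompt] = queue
        return queue.popleft()
```

When the user asks for another version, the next alternative is returned instantly without a new API call. The trade-off is cost: you pay for the completion tokens of all `n` choices, even the ones the user never sees.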

Our learnings and results

While crafting good prompts is essential, there's much more to building a good product with GPT-4! Whether you're just starting to explore the possibilities it brings to the table or looking to improve your current implementation, we hope this post provides valuable pointers to help you successfully deploy an app that is based on this revolutionary new model.

If you're interested in seeing our work in action, check out our AI Copywriter!
